The Present Home Lab

Ol’ Faithful! I know it’s only been up and running for about two and a half years, but outside of a single issue I haven’t had any problems with the box. Still, I know this isn’t a viable long-term solution. Apart from upgrading the fans to Noctuas and adding a dedicated UPS, the unit hasn’t changed much since I originally built it. The final cost for this server was just over $3,500, which is quite high.

So, where did I go wrong with this build? Well, if this server were just for ad-hoc development and testing, nowhere. The issues arose when I started putting workloads on it that I needed operational 24/7: a NAS, TeamCity, build agents, DNS, and a beefier desktop VM for slicing models for 3D printing.


I originally provisioned the unit as RAID 6, meaning I could lose 2 drives and still have the unit operational. This was the old SysAdmin in me, falling back to RAID 5 or 6. The issue with both RAID 5 and 6 is the rate of unrecoverable read errors (UREs, ye olde bad sectors). Back when I SysAdmin’ed on a day-to-day basis, we didn’t have 1TB drives.

The issues with RAID 5 and 6 show up when you have drives over 1TB in the array and need to do a rebuild (replace a failed drive). If you’re using consumer HDDs, you probably have an unrecoverable read error rate of around 1 per 10^14 bits read. Enterprise HDDs are rated at 1 per 10^15 bits or better.

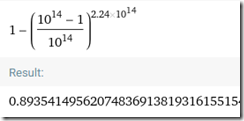

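Using the formula Probability = 1 - ((X - 1)/X)^R, where X is the drive’s rated number of bits read per unrecoverable read error (10^14 for consumer drives) and R is the number of bits read during the rebuild, I can calculate the probability of hitting an error, effectively another drive failure, during a rebuild.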

For my eight 4TB WD Black drives with one failed disk, the rebuild has to read the remaining seven drives (28TB). What is the probability of another failure during that rebuild?

89%, fam. With RAID 6 I could survive another failure, and with two failed disks the rebuild only reads six drives, so the probability goes down a bit, but we’re still talking about an 85% chance of another failure. From experience, when I added more drives to my RAID 6 array it took about 7 days to rebuild. Getting an unrecoverable read error in normal day-to-day operation is fine: you might have a corrupt file, a picture might be off, etc. Most file formats can deal with that easily, but during a rebuild you’re SOL.
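If you want to run the numbers for your own array, here’s a minimal Python sketch of the formula above. The function name `rebuild_ure_probability` is just something I made up for illustration, and it assumes decimal terabytes (1TB = 10^12 bytes), that the rebuild reads every surviving drive in full exactly once, and that errors occur independently at the drive’s rated URE spec, which is a simplification of real-world behavior.

```python
import math


def rebuild_ure_probability(drives_read: int, drive_tb: float, bits_per_ure: float = 1e14) -> float:
    """Chance of at least one URE while reading every surviving drive once.

    Implements P = 1 - ((X - 1) / X) ** R, where X is the rated number of bits
    read per unrecoverable read error and R is the number of bits read.
    Assumes decimal TB and independent errors at the rated spec.
    """
    bits_read = drives_read * drive_tb * 1e12 * 8  # decimal TB -> bytes -> bits
    # ((X - 1) / X) is extremely close to 1, so compute R * ln(1 - 1/X) with
    # log1p to avoid losing precision, then exponentiate.
    return 1 - math.exp(bits_read * math.log1p(-1 / bits_per_ure))


if __name__ == "__main__":
    # Eight 4TB drives: one failed disk means reading 7 drives, two means 6.
    for label, drives in [("1 failed disk", 7), ("2 failed disks", 6)]:
        p = rebuild_ure_probability(drives, 4.0)
        print(f"{label}, consumer (10^14): {p:.0%}")
    # Same 7-drive rebuild with enterprise-rated (10^15) drives.
    p = rebuild_ure_probability(7, 4.0, bits_per_ure=1e15)
    print(f"1 failed disk, enterprise (10^15): {p:.0%}")
```

Running it gives the same 89% and 85% as above, and shows that the same rebuild on drives rated at 10^15 drops to roughly 20%, which is a big part of why enterprise drives matter here.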

So a major issue is my choice of RAID. The next issue is that in the event of a disk failure I have no easy way to identify which drive failed, or even get at it, without turning the server off, pulling it out and cracking the case. Without hot-swap drive bays, quickly identifying and replacing failed drives is much harder.

Another issue with the setup is the lack of redundant power supplies. With only one PSU in the case, if it goes, again, SOL. So for me, I need a single server build with redundant PSUs and hot-swappable drive bays. That makes it much easier to keep a server up and run mission-critical operations on it.

With my next setup I’m intending to put some production loads on it as well, so I really need to invest in server-grade kit this time around. In the next post I’ll go into my new setup’s design, how I’m going about it, and how I’m dealing with these issues to make it highly available.

I’m the Founder of Resgrid, an open source computer aided dispatch (CAD) solution for First Responders, Industrial and Business environments. If you or someone you know is part of a first responder organization like a volunteer fire department, career fire department, EMS, search and rescue, CERT, public safety or disaster relief organization, check Resgrid out!